Videos contain a lot of information, but there is no effective way to search it. For example, Target has a few petabytes (1 PB = 1,000 TB) of footage from its surveillance cameras and wants to analyze customer behavior in the gaming section at a certain time. At that scale, reviewing the footage is practically impossible, and the cost would be tremendous. Another example is searching for a specific scene in a movie. So far, users still have to do this manually. So what is the solution?

At the Teradata Hackathon on March 3, 2017, I came up with the idea of tagging videos with meaningful information and saving it as the video's metadata. The idea is simple and low-cost to implement and maintain.

I use OpenCV to extract the distinct frames from a video, upload them to AWS S3, and then send requests to AWS Rekognition to get the labels and their confidence scores.
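Below is a minimal sketch of that pipeline, not the repository's actual code. It assumes a frame counts as "different" when its mean absolute pixel difference from the last kept frame exceeds a hypothetical `threshold` of 30, that timestamps are derived from the frame index and the reported FPS, and that frames are stored under a hypothetical `frames/<ms>.jpg` key in a bucket you supply. The OpenCV and boto3 calls (`VideoCapture`, `absdiff`, `imencode`, `put_object`, `detect_labels`) are real; the function names and parameters are illustrative.

```python
"""Sketch: keep distinct frames with OpenCV, upload them to S3, label them with Rekognition."""


def read_frames(path):
    """Yield (timestamp_ms, frame) pairs from a video file."""
    import cv2  # lazy import: only needed when reading a real video

    cap = cv2.VideoCapture(path)
    fps = cap.get(cv2.CAP_PROP_FPS) or 30.0  # assumption: fall back to 30 fps if unreported
    index = 0
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            yield index * 1000.0 / fps, frame
            index += 1
    finally:
        cap.release()


def mean_abs_diff_differs(threshold=30.0):
    """Build a comparison that flags frames whose mean absolute pixel difference exceeds threshold."""
    import cv2

    def differs(a, b):
        return float(cv2.absdiff(a, b).mean()) > threshold

    return differs


def distinct_frames(frames, differs):
    """Keep a frame only if it differs enough from the last frame that was kept."""
    last = None
    for ts, frame in frames:
        if last is None or differs(last, frame):
            last = frame
            yield ts, frame


def label_frame(s3, rekognition, bucket, ts_ms, jpeg_bytes, min_confidence=50.0):
    """Upload one JPEG-encoded frame to S3, then ask Rekognition for its labels."""
    key = f"frames/{int(ts_ms)}.jpg"  # hypothetical key layout
    s3.put_object(Bucket=bucket, Key=key, Body=jpeg_bytes)
    return rekognition.detect_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,
    )


def tag_video(path, bucket, threshold=30.0):
    """Run the whole pipeline; requires OpenCV, boto3, and AWS credentials."""
    import boto3
    import cv2

    s3, rekognition = boto3.client("s3"), boto3.client("rekognition")
    results = []
    for ts, frame in distinct_frames(read_frames(path), mean_abs_diff_differs(threshold)):
        ok, buf = cv2.imencode(".jpg", frame)
        if ok:
            results.append((ts, label_frame(s3, rekognition, bucket, ts, buf.tobytes())))
    return results
```

Passing the S3 and Rekognition clients into `label_frame`, rather than creating them inside it, keeps that step easy to exercise with stand-ins; only `tag_video` needs OpenCV, boto3, and real AWS access.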

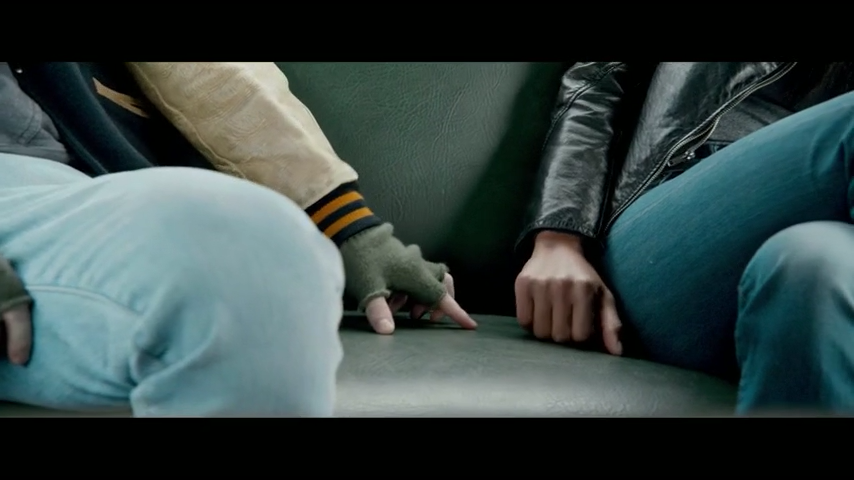

Once we have the labels, we tag the frame with the returned information. Here is an example Rekognition response for one frame:

    {
      "Labels": [
        { "Confidence": 98.7389907836914, "Name": "Couch" },
        { "Confidence": 98.7389907836914, "Name": "Furniture" },
        { "Confidence": 71.52261352539062, "Name": "Finger" },
        { "Confidence": 64.42884826660156, "Name": "Ankle" },
        { "Confidence": 53.46735763549805, "Name": "Cushion" },
        { "Confidence": 53.46735763549805, "Name": "Home Decor" },
        { "Confidence": 53.46735763549805, "Name": "Pillow" }
      ],
      "OrientationCorrection": "ROTATE_0"
    }
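As one possible way to store these tags, here is a sketch that assumes a hypothetical JSON sidecar file per video (not necessarily the format the repository uses). Each record keeps the frame's timestamp and a mapping from each label's `Name` to its `Confidence`, rounded to two decimals; the rest of the response is dropped.

```python
import json


def frame_tags(timestamp_ms, response):
    """Turn one Rekognition DetectLabels response into a compact tag record for a frame."""
    return {
        "timestamp_ms": timestamp_ms,
        # Keep only each label's name and confidence; other response fields are discarded.
        "labels": {label["Name"]: round(label["Confidence"], 2) for label in response["Labels"]},
    }


def save_metadata(records, path):
    """Write all tag records for one video to a JSON sidecar file (hypothetical format)."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump({"frames": records}, f, indent=2)
```

Keeping the tags in a separate sidecar file leaves the original video untouched; embedding them in the container's own metadata would be an alternative.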

To answer a user's query, we search the tags for the keywords and jump to the frame that contains the matching label above a chosen confidence threshold.
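A keyword lookup could look like the sketch below, assuming each frame's tags are stored as a record with a `timestamp_ms` and a `labels` dictionary mapping label names to confidences (a hypothetical layout). The default threshold of 60 is arbitrary, and matching is a case-insensitive comparison against whole label names.

```python
def find_frames(records, keyword, min_confidence=60.0):
    """Return sorted timestamps (ms) of frames labeled `keyword` with at least `min_confidence`."""
    keyword = keyword.strip().lower()
    hits = []
    for record in records:
        for name, confidence in record["labels"].items():
            if name.lower() == keyword and confidence >= min_confidence:
                hits.append(record["timestamp_ms"])
                break  # one match per frame is enough
    return sorted(hits)
```

With OpenCV, a player could then seek to a hit with `cap.set(cv2.CAP_PROP_POS_MSEC, timestamp)` before reading the frame.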

We could build a cloud storage service for audio and video so that, when users upload media files, a back-end service analyzes and tags each portion of a file as it is received. This way, tagging happens during the upload itself, and end users do not have to wait extra time before they can search a file's content.
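One way to sketch that back end, assuming uploads arrive in chunks and that a hypothetical `tag_chunk(media_id, chunk)` callable performs the OpenCV and Rekognition analysis on a single chunk: the upload handler only enqueues each chunk, and a background thread tags it and appends the results to an in-memory index, so the first part of a file becomes searchable while the rest is still uploading. A production service would likely use a durable queue and a database instead of `queue.Queue` and a dictionary.

```python
import logging
import queue
import threading


class TaggingService:
    """Tags uploaded media chunks in a background thread as they arrive (illustrative sketch)."""

    def __init__(self, tag_chunk):
        self._tag_chunk = tag_chunk  # hypothetical: (media_id, chunk) -> list of tag records
        self._queue = queue.Queue()
        self._index = {}  # media_id -> tag records produced so far
        self._lock = threading.Lock()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def on_chunk_received(self, media_id, chunk):
        """Called by the upload handler; returns immediately and leaves tagging to the worker."""
        self._queue.put((media_id, chunk))

    def _run(self):
        while True:
            item = self._queue.get()
            if item is None:  # sentinel from close(): stop the worker
                self._queue.task_done()
                break
            media_id, chunk = item
            try:
                records = self._tag_chunk(media_id, chunk)
                with self._lock:
                    self._index.setdefault(media_id, []).extend(records)
            except Exception:  # keep the worker alive if one chunk fails
                logging.exception("tagging failed for %s", media_id)
            finally:
                self._queue.task_done()

    def tags(self, media_id):
        """Return a snapshot of the tags produced so far for one file."""
        with self._lock:
            return list(self._index.get(media_id, []))

    def close(self):
        """Process everything already queued, then stop the worker thread."""
        self._queue.put(None)
        self._worker.join()
```

Because the upload handler never waits on tagging, uploads stay fast; searches simply see whatever tags the worker has produced so far.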

I only had about 24 hours for the project, so we only finished a proof of concept for video. However, the same idea can be applied to audio to make its content searchable. We could also build a more advanced app that recognizes specific targets, such as a particular person's face or voice, in video or audio.

Update: 5 days later, on March 8, at the Google Cloud Next conference, Google announced an API that applies the same idea to videos. More info can be found here:

Google’s new machine learning API recognizes objects in videos

The code, written in Python, is very simple and can be found on my GitHub:

https://github.com/nguyenbuiUCSD/Searchable-Media